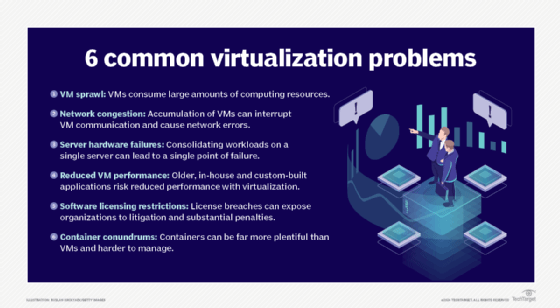

6 common virtualization problems and how to solve them

Organizations can correct common problems with virtualization, such as VM sprawl and network congestion, through business policies rather than purchasing additional technology.

IT administrators often have to deal with virtualization problems, such as VM sprawl, network congestion, server hardware failures, reduced VM performance, software licensing restrictions and container issues. But companies can mitigate these issues before they occur with lifecycle management tools and business policies.

Server virtualization brings far better system utilization, workload flexibility and other benefits to the data center. But, in spite of its benefits, virtualization isn't perfect: The hypervisors themselves are sound, but the issues that arise from virtualization can waste resources and drive administrators to the breaking point.

Here are six common virtualization issues admins encounter and how to effectively address them.

1. VM sprawl wastes valuable computing resources

Organizations often virtualize a certain number of workloads and then have to buy more servers down the line to accommodate more workloads. This occurs because companies usually don't have the business policies in place to plan or manage VM creation.

Before virtualization, a new server took weeks -- if not months -- to deploy because companies had to plan a budget for systems and coordinate deployment. Bringing a new workload online was a big deal that IT professionals and managers scrutinized. With virtualization, a hypervisor can allocate computing resources and spin up a new VM on an available server in minutes. Hypervisors include offerings such as Microsoft Hyper-V and VMware vSphere, along with a cadre of well-proven open source alternatives.

However, once VMs are in the environment, there are rarely any default processes or monitoring in place to tell if anyone needs or uses them. This results in VMs that potentially fall into disuse. Yet, those abandoned VMs accumulate over time and consume computing, backup and disaster recovery resources. This is known as VM sprawl, and it's a phenomenon that imposes operational costs and overhead for those unused VMs but brings no tangible value to the business.

Because VMs are so easy to create and destroy, organizations need policies and procedures that help them understand when they need a new VM, determine how long they need it and justify it as if it were a new server. Organizations should also consider tracking VMs with lifecycle management tools. There should be clear review dates and removal dates so that the organization can either extend or retire the VM. Other tools, such as application performance management platforms, can also help gather utilization and performance metrics about each workload operating across the infrastructure.

All this helps tie VMs to specific departments, divisions or other stakeholders so organizations can see exactly how much of the IT environment that part of the business needs and how the IT infrastructure is being used. Some businesses even use chargeback tactics to bill departments for the amount of computing they use. Chances are that a workload owner that needs to pay for VMs takes a diligent look at every one of them.

2. VMs can congest network traffic

Network congestion is another common problem. For example, an organization that routinely runs its system numbers might notice that it has enough memory and CPU cores to host 25 VMs on a single server. But, once IT admins load those VMs onto the server, they might discover that the server's only network interface card (NIC) port is already saturated, which can impair VM communication and cause some VMs to report network errors or suffer performance issues.

Before virtualization, one application on a single server typically used only a fraction of the server's network bandwidth. But, as multiple VMs take up residence on the virtualized server, each VM on the server demands some of the available network bandwidth. Most servers are only fitted with a single NIC port, and it doesn't take long for network traffic on a virtualized server to cause a bottleneck that overwhelms the NIC. Workloads sensitive to network latency might report errors or even crash.

Standard Gigabit Ethernet ports can typically support traffic from several VMs, but organizations planning high levels of consolidation might need to upgrade servers with multiple NIC ports -- or a higher-bandwidth NIC and LAN segment -- to provide adequate network connectivity. Organizations can sometimes relieve short-term traffic congestion problems by rebalancing workloads to spread out bandwidth-hungry VMs across multiple servers.

As an alternative rebalancing strategy, two bandwidth-hungry VMs routinely communicating across two different physical servers might be migrated so that both VMs are placed on the same physical server. This effectively takes that busy VM-to-VM communication off the LAN and enables it to take place within the physical server itself -- alleviating the congestion on the LAN caused by those demanding VMs.

Remember that NIC upgrades might also demand additional switch ports or switch upgrades. In some cases, organizations might need to distribute the traffic from multiple NICs across multiple switches to prevent switch backplane saturation. This requires the attention of a network architect involved in the virtualization and consolidation effort from the earliest planning phase.

3. Consolidation multiplies the effect of hardware failures

Consider 10 VMs all running on the same physical server. Virtualization provides tools such as snapshots and live migration that can protect VMs and ensure their continued operation under normal conditions. But virtualization does nothing to protect the underlying hardware. So, what happens when the server fails? It's the age-old cliche of putting all your eggs in one basket.

The physical hardware platform becomes a single point of failure and affects all the workloads running on the platform. Greater levels of consolidation mean more workloads on each server, and server failures affect those workloads. This is significantly different than traditional physical deployments, where a single server supported one application. Similar effects can occur in the LAN, where a fault in a switch or other network gear can isolate one or more servers -- and disrupt the activity of all the VMs on those servers.

In a properly architected and deployed environment, the affected workload fails over and restarts on other servers. But there is some disruption to the workload's availability during the restart. Remember that the workload must restart from a snapshot in storage and move from disk to memory on an available server. The recovery process might take several minutes depending on the size of the image and the amount of traffic on the network. An already congested network might take much longer to move the snapshot into another server's memory. A network fault might prevent any recovery at all.

There are several tactics for mitigating server hardware failures and downtime. In the short term, organizations can opt to redistribute workloads across multiple servers -- perhaps on different LAN segments -- to prevent multiple critical applications from residing on a single server. It might also be possible to lower consolidation levels in the short term to limit the number of workloads on each physical system.

Over the long term, organizations can deploy high availability servers for important consolidation platforms. These servers might include redundant power supplies and numerous memory protection technologies, such as memory sparing and memory mirroring.

These server hardware features help to prevent errors or, at least, prevent them from becoming fatal. The most critical workloads might reside on server clusters, which keep multiple copies of each workload in synchronization. If one server fails, another node in the cluster takes over and continues operation without disruption. IT infrastructure engineers must consider the potential effect of hardware failures in a virtualized environment and implement the architectures, hardware and policies needed to mitigate faults before they occur.

4. Application performance can still be marginal in a VM

Organizations that decide to move their 25-year-old, custom-written corporate database server into a VM might discover that the database performs slower than molasses. Or, if organizations decide to virtualize a modern application, they might notice that it runs erratically or is slow. There are several possibilities when it comes to VM performance problems.

For older, in-house and custom-built applications, one of the most efficient ways to code software is to use specific hardware calls. Unfortunately, simple lift-and-shift migrations can be treacherous for many legacy applications. Any time organizations change the hardware or abstract it from the application, the software might not work correctly, and it usually needs to be recoded.

It's possible that antique software simply isn't compatible with virtualization; organizations might need to update it, switch to some other commercial software product or SaaS offering that does the same job or continue using the old physical system the application was running on before. But none of these are particularly attractive or practical options for organizations on a tight budget.

Organizations with a modern application that performs poorly after virtualization might find the workload needs more computing resources, such as memory space, CPU cycles and cores. Organizations can typically run a benchmark utility and identify any resources that are overutilized and then provision additional computing resources to provide some slack. For example, if memory is too tight, the application might rely on disk file swapping, which can slow performance. Adding enough memory to avoid disk swapping can improve performance. In some cases, migrating a poorly performing VM to another server -- perhaps a newer or lightly used server -- can help address the problem.

Whether the application in question is modern or legacy, testing in a lab environment prior to virtualization could have helped identify troublesome applications and given organizations the opportunity to discover issues and formulate answers to virtualization problems before rolling the VM out into production.

5. Software licensing is a slippery slope in a virtual environment

Software licensing was always confusing and expensive, but software vendors have quickly caught up with virtualization technology and updated their licensing rules to account for VMs, multiple CPUs and other resource provisioning loopholes that virtualization enables. The bottom line is that organizations can't expect to clone VMs ad infinitum without buying licenses for the OS and the application running in each VM.

Organizations must always review and understand the licensing rules for any software that they deploy. Large organizations might even retain a licensing compliance officer to track software licensing and offer guidance for software deployment, including virtualization. Organizations should involve these professionals if they are available. Modern systems management tools increasingly provide functionality for software licensing tracking and reporting.

License breaches can expose organizations to litigation and substantial penalties. Major software vendors often reserve the right to audit organizations and verify their licensing. Most vendors are more interested in getting their licensing fees than litigation, especially for first offenders. But, when organizations consider that a single license might cost thousands of dollars, careless VM proliferation can be financially crippling.

6. Containers can lead to conundrums

The emergence of virtual containers has only exacerbated the traditional challenges present in VMs. Containers, such as those used through Docker and Apache Mesos, are essentially small and resource-efficient VMs that can be generated in seconds and live for minutes or hours as needed. Consequently, containers can be present in enormous numbers with startlingly short lifecycles. This demands high levels of automation and orchestration with specialized tools, such as Kubernetes.

When implemented properly and managed well, containers provide an attractive and effective mechanism for application deployment -- often in parallel with traditional VMs. But containers are subject to the same realities of risk and limitations discussed for VMs. When you consider that containers can be far more plentiful and harder to manage, the challenges for containers demand even more careful attention from IT staff.

Server virtualization has changed the face of modern corporate computing with VMs and containers. It enables efficient use of computing resources on fewer physical systems and provides more ways to protect data and ensure availability. But virtualization isn't perfect, and it creates new problems that organizations must understand and address to keep the data center running smoothly.

Stephen J. Bigelow, senior technology editor at TechTarget, has more than 20 years of technical writing experience in the PC and technology industry.