Autonomous AI agents: A progress report

Still in the early stages of development, AI agents built on LLMs might one day number in the billions, form large networks of interconnected ecosystems and reshape the commercial landscape.

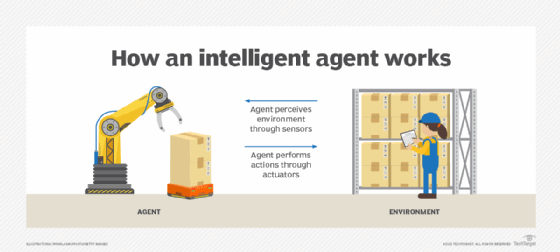

Agent-based computing and modeling have been around for decades, but thanks to recent innovations in generative AI, researchers, vendors and hobbyists are starting to build more autonomous AI agents. While these efforts are still in their early stages, the long-term goal is self-directing robotic process automation bots that can execute simple tasks and eventually collaborate on entire processes.

Interest spiked last year when Yohei Nakajima created BabyAGI on top of ChatGPT and LangChain and published the code on GitHub. It was more a proof of concept than an enterprise-class tool with the appropriate trust and risk management guardrails. Since then, Rabbit has created an autonomous AI agent device and OS that quickly sold out. Dozens of other autonomous AI agent tools have been released, including AutoGPT, LlamaIndex, AgentGPT, MetaGPT, AutoChain, PromptChainer and PromptFlow. In addition, vendors such as Microsoft, OpenAI and UiPath are in the early stages of developing autonomous AI offerings.

Some of that initial excitement is subsiding, but interest is increasing in more mature copilot offerings, said Adnan Masood, chief AI and machine learning architect at consultancy UST. "Autonomous agents," he explained, "are the future due to LLM [large language model] breakthroughs enabling autonomy, signaling a huge shift from rule-based systems." Enterprises have yet to see how these new offerings will address critical issues like data security, auditing, transparency and keeping humans in the loop.

Autonomous agents will need to reliably perform complex context-dependent tasks, maintain long-term memory and address ethical situations and inherent biases. Early developments, Masood said, show promise in task automation and decision-making but often struggle in scenarios requiring deep contextual understanding -- mainly because of underlying LLM and compute limitations. There are also significant integration challenges with existing systems that can provide agents with necessary background knowledge. "Modern copilots are trying to address these limitations in an incremental manner for enterprise use cases," Masood said, "but there is a long way ahead."

When AI learns about us

Humans learned a lot about AI's potential in 2023, but 2024 will be the year of AI learning about us, conjectured Adam Burden, global innovation lead and chief software engineer at consultancy Accenture. New advances in AI are bringing human-like intelligence and abilities to businesses. "We've watched AI evolve from performing singular tasks to AI agents that with appropriate oversight can collaborate with one another and act as proxies for people and enterprises alike," Burden explained.

Many enterprises today are rolling out chatbots that handle multiple tasks. The goal, Burden said, is to develop an ecosystem of domain-specific agents optimized for different tasks. Accenture's "Technology Vision 2024" report found that 96% of executives worldwide agreed that AI agent ecosystems will represent "a significant opportunity for their organizations in the next three years."

"We believe that over the next decade, we will see the rise of entire agent ecosystems, large networks of interconnected AI that will push enterprises to think about their intelligence and automation strategy in a fundamentally different way," Burden said. He sees a future in which billions of autonomous agents connect with each other and perform tasks, significantly altering the landscape of commerce and customer care and amplifying everyone's abilities. AI, for example, is currently used to detect manufacturing flaws, but connected agents eventually could enable fully automated, lights-out production of goods at factories without requiring humans onsite. "This shift," Burden noted, "is driving intense interest in autonomous AI agents now."

AI agents are the next key milestone on the road to artificial general intelligence (AGI), Masood reasoned. He envisions AI OSes that manage these agent environments, driven by advances in AI hardware, larger data sets and LLM innovations. AI agents will understand and communicate in natural language, enabling more complex, intelligent and adaptive systems that operate with a degree of autonomy previously unattainable.

"LLMs provide a natural, easy-to-use mechanism for getting answers to questions, including descriptions of how to perform tasks," added Karen Myers, lab director for the Artificial Intelligence Center at scientific research institute SRI International. Applying LLMs to autonomous AI agents, she said, is a logical next step.

Experimenting with autonomous AI

Although AI agents and their applications are just in the early stages of development, the following companies are experimenting with new autonomous AI frameworks:

- Microsoft's TaskWeaver is an experimental tool that integrates with LLMs and various agentic AI tools, including LangChain, Semantic Kernel, Transformers Agents, AutoGen and Jarvis.

- OpenAI is developing an Assistants API to support AI-assisted tasks running in the cloud or on devices like computers and phones.

- UiPath is developing Autopilot capabilities on top of its robotic process automation (RPA) infrastructure; Autopilot promises to blend generative AI and specialized AI to run automated processes across the enterprise.

- Rabbit is developing a Large Action Model that learns and executes human actions on apps and services.

Earlier work on autonomous AI agents dates back to SRI's development of Shakey the Robot in 1966, Myers said. The focus was on creating an entity that could respond to assigned tasks by setting appropriate goals, perceiving the environment, generating a plan to achieve those goals and executing the plan while adapting to the environment. Shakey was designed to operate as an embedded system over an extended period, performing a range of different but related tasks.
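The cycle Myers describes (set a goal, perceive the environment, plan, execute and adapt) can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions: the grid world, the adjacent-cells-only sensor and the breadth-first-search planner are all hypothetical choices made for the example, not a reconstruction of Shakey's actual software.

```python
from collections import deque
from typing import Optional

Pos = tuple[int, int]  # (x, y) cell on a hypothetical square grid


def neighbors(pos: Pos) -> list[Pos]:
    """The four cells adjacent to pos (they may lie outside the grid)."""
    x, y = pos
    return [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]


def perceive(pos: Pos, world_obstacles: set[Pos]) -> set[Pos]:
    """Hypothetical short-range sensor: reports obstacles in adjacent cells only."""
    return {cell for cell in neighbors(pos) if cell in world_obstacles}


def plan(start: Pos, goal: Pos, known_obstacles: set[Pos], size: int) -> Optional[list[Pos]]:
    """Breadth-first search for a shortest path that avoids *known* obstacles.

    Unknown cells are optimistically assumed to be free. Returns the path
    excluding start, or None if no path exists given current knowledge.
    """
    came_from: dict[Pos, Optional[Pos]] = {start: None}
    frontier = deque([start])
    while frontier:
        current = frontier.popleft()
        if current == goal:
            break
        for nxt in neighbors(current):
            in_bounds = 0 <= nxt[0] < size and 0 <= nxt[1] < size
            if in_bounds and nxt not in known_obstacles and nxt not in came_from:
                came_from[nxt] = current
                frontier.append(nxt)
    if goal not in came_from:
        return None
    path = []
    node = goal
    while node != start:  # walk back from the goal to rebuild the route
        path.append(node)
        node = came_from[node]
    return path[::-1]


def run_agent(start: Pos, goal: Pos, world_obstacles: set[Pos],
              size: int, max_steps: int = 100) -> Optional[list[Pos]]:
    """Perceive -> plan -> act, one step at a time, replanning as knowledge grows."""
    pos, known = start, set()
    trajectory = [pos]
    while pos != goal:
        if len(trajectory) - 1 >= max_steps:
            return None                            # give up: step budget spent
        known |= perceive(pos, world_obstacles)    # 1. perceive surroundings
        path = plan(pos, goal, known, size)        # 2. plan with what is known
        if path is None:
            return None                            # goal unreachable as far as we know
        pos = path[0]                              # 3. act: take one step only
        trajectory.append(pos)                     # 4. repeat, adapting to new observations
    return trajectory


if __name__ == "__main__":
    wall = {(2, 0), (2, 1), (2, 2), (2, 3)}  # a wall with a gap at (2, 4)
    print(run_agent((0, 0), (4, 0), wall, size=5))
```

Because the agent replans after every step, it adapts whenever its sensor reveals an obstacle it did not know about, which corresponds to the "adapting to the environment" part of the description above.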

Scaling this approach was difficult. Until recently, most methods for building autonomous agents required manual knowledge engineering, with low-level skills and models explicitly coded to drive agent behavior. Machine learning was gradually adopted for narrow components such as object recognition or obstacle avoidance for mobile robots.

Recent innovations in applying LLMs to understand tasks have yielded an entirely different and more automated approach. The focus now is on synthesizing and executing a solution to a given task rather than on supporting a continuously operating agent that dynamically sets its own goals. These newer approaches also use LLMs for planning and problem-solving. "One fascinating aspect of the LLM approach is the iterative design in which solutions are experimentally tried and then the output critiqued to incrementally reach a satisfactory solution," Myers explained.
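The iterative try-and-critique design Myers describes can be sketched as a simple loop. In this minimal Python sketch, `refine`, `toy_generate` and `toy_critique` are hypothetical names: the two toy functions are offline stand-ins for what would be LLM calls in a real agent, and the word-limit task is an invented example, not any vendor's actual framework.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Critique:
    ok: bool        # did the critic accept the candidate?
    feedback: str   # natural-language notes fed into the next attempt


# generate(task, previous_candidate, feedback) -> new candidate
Generator = Callable[[str, Optional[str], Optional[str]], str]
# critique(task, candidate) -> Critique
Critic = Callable[[str, str], Critique]


def refine(task: str, generate: Generator, critique: Critic,
           max_rounds: int = 5) -> tuple[str, bool, int]:
    """Try a solution, critique it, retry with the feedback.

    Stops when the critic accepts or the round budget runs out.
    Returns (last candidate, whether it was accepted, rounds used).
    """
    previous: Optional[str] = None
    feedback: Optional[str] = None
    for round_no in range(1, max_rounds + 1):
        candidate = generate(task, previous, feedback)
        review = critique(task, candidate)
        if review.ok:
            return candidate, True, round_no
        previous, feedback = candidate, review.feedback
    return previous or "", False, max_rounds


# --- Hypothetical stand-ins for LLM calls, so the loop runs offline ---
WORD_LIMIT = 6
SOURCE = "autonomous agents plan tasks, try solutions, critique the output and retry"


def toy_generate(task: str, previous: Optional[str], feedback: Optional[str]) -> str:
    if previous is None or feedback is None:
        return SOURCE  # first attempt: return the text untouched
    excess = int(re.search(r"\d+", feedback).group())
    words = previous.split()
    # Deliberately naive "model": trims only about half the reported excess per round.
    return " ".join(words[: len(words) - (excess + 1) // 2])


def toy_critique(task: str, candidate: str) -> Critique:
    excess = len(candidate.split()) - WORD_LIMIT
    if excess <= 0:
        return Critique(ok=True, feedback="within limit")
    return Critique(ok=False, feedback=f"too long by {excess} words")


result, accepted, rounds = refine("shorten to six words", toy_generate, toy_critique)
print(accepted, rounds, result)
```

Because the toy generator fixes only part of each reported problem, it takes several critique rounds to satisfy the critic, mirroring the incremental convergence Myers describes. The `max_rounds` cap is worth keeping in any real version, since an LLM critic may never be satisfied.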

Masood sees these innovations as a departure from previous work on enterprise assistants that lacked adaptability, including expert systems, autonomous agents, intelligent agents, decision support systems, RPA bots, cognitive agents and self-learning algorithms. Autonomous agents that use LLMs are getting better at dynamic learning and adaptability, understanding context, making predictions and interacting in a more human-like manner. Agents therefore can operate with minimal human intervention and adapt to new information and environments in real time.

AI agents as co-workers

Yet when the time comes to deploy chatbot or LLM agent technologies more broadly without involving a human for validation, caution is required. These agents can't yet be trusted to function autonomously in mission-critical settings, which makes trustworthiness a key concern, Myers said.

Businesses need to prioritize data security and ethical AI practices, Masood added. Companies also must develop mechanisms to ensure the decisions made by these autonomous agents align with organizational values and adhere to legal standards. That requires addressing issues surrounding algorithmic fairness, accountability, auditability, transparency, explainability, security, and toxicity and bias mitigation.

Companies must start democratizing access to data, Burden recommended. That might require rethinking data management practices like vectorizing databases, providing new APIs to access data and improving tools to work better with corporate systems. Additionally, decision-makers need to identify which agent ecosystems they'll want to create and participate in.

Burden sees this preparation as a process of introducing humans to their future digital co-workers. Humans will have an important role in creating, testing and managing agents and deciding when and where internal agents should be allowed to run independently. "If you want to reinvent your AI strategy to tap into agent ecosystems, you need to reinvent your people strategy, too," Burden advised.

Although there's still a lot of work to do before AI agents can truly act on behalf of humans, it might be a good idea to get a head start and teach the workforce to reason with existing intelligent technologies. "The reality is that agents are not yet foolproof and still getting stuck, misunderstanding intent and generating inaccurate responses," Burden acknowledged. "Without the appropriate checks and balances, agents could wreak havoc on your business."

Autonomous AI's future

Autonomous AI can be viewed as an evolution of RPA, which is narrowly focused on point tasks and still labor-intensive to develop. "LLM-based autonomous agents," Myers surmised, "have the potential to enable [RPA] automation to be built much more easily and for a much broader range of problems."

AI will eventually be powered by autonomous agents, leading to fully autonomous AI OSes that support more adaptable systems aligned with company goals, compliance, legal standards and human values, Masood predicted.

On the dark side, autonomous AI agents might power more resilient, dynamic and self-replicating malicious bots that launch denial-of-service (DoS) attacks, hack enterprise systems, drain bank accounts and run disinformation campaigns. Businesses also need to consider how autonomous agents will affect human workers, including their roles and responsibilities.

"Agents will help build our future," Burden said, "but it's our job to make sure they build a future we want to live in."

George Lawton is a journalist based in London. Over the last 30 years, he has written more than 3,000 stories about computers, communications, knowledge management, business, health and other areas that interest him.