Claude AI vs. ChatGPT: How do they compare?

Wondering whether to use Anthropic's Claude or OpenAI's ChatGPT for your project? Explore how the two stack up against each other in terms of cost, performance and features.

In an increasingly crowded generative AI market, two early front-runners have emerged: Claude AI and ChatGPT. Developed by AI startups Anthropic and OpenAI, respectively, both products use some of the most powerful large language models currently available, but the two have some key differences.

ChatGPT is likely today's most widely recognizable LLM-based chatbot. Since its launch in late 2022, ChatGPT has attracted both consumer and business interest due to its powerful language abilities, user-friendly interface and broad knowledge base.

Claude, Anthropic's answer to ChatGPT, is a more recent entrant to the AI race, but it's quickly become a competitive contender. Co-founded by former OpenAI executives, Anthropic is known for its prioritization of AI safety, and Claude stands out for its emphasis on reducing risk.

While both Claude and ChatGPT are viable options for many use cases, their features differ and reflect their creators' broader philosophies. To decide which chatbot is the best fit for you, compare Claude and ChatGPT in terms of model options, technical details, privacy and other features.

TechTarget Editorial compared these products using hands-on testing and by analyzing informational materials from OpenAI and Anthropic, user reviews on tech blogs and Reddit, and industry and academic research papers.

Claude AI vs. ChatGPT model options

To fully understand the options available to users, it's important to note that Claude and ChatGPT are names for chatbot products, not specific LLMs. When interacting with Claude or ChatGPT, users can choose to run different model versions under the hood, whether using a web app or calling an API.
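Over the API, the underlying model is just a request parameter, so switching versions usually means changing one string. The sketch below only builds request bodies for Anthropic's Messages API and OpenAI's Chat Completions API without sending them. The model identifiers are the ones we believe were published for these releases and should be checked against each provider's current model list; the helper names are hypothetical.

```python
import json

# Assumed model identifiers; verify against each provider's documentation.
ANTHROPIC_MODELS = {
    "opus": "claude-3-opus-20240229",
    "sonnet": "claude-3-sonnet-20240229",
    "haiku": "claude-3-haiku-20240307",
}
OPENAI_MODELS = {
    "gpt-4": "gpt-4",
    "gpt-3.5": "gpt-3.5-turbo",
}


def anthropic_request(tier: str, prompt: str, max_tokens: int = 1024) -> dict:
    """Build (but do not send) a body for Anthropic's Messages API."""
    return {
        "model": ANTHROPIC_MODELS[tier],  # selects Opus, Sonnet or Haiku
        "max_tokens": max_tokens,  # required by the Messages API
        "messages": [{"role": "user", "content": prompt}],
    }


def openai_request(tier: str, prompt: str) -> dict:
    """Build (but do not send) a body for OpenAI's Chat Completions API."""
    return {
        "model": OPENAI_MODELS[tier],  # selects GPT-4 or GPT-3.5
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    prompt = "Summarize this contract in three bullet points."
    print(json.dumps(anthropic_request("haiku", prompt), indent=2))
    print(json.dumps(openai_request("gpt-4", prompt), indent=2))
```

One practical difference shows up even in this small example: Anthropic's API requires `max_tokens` on every request, while OpenAI's treats it as optional.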

Claude

In early March 2024, Anthropic released the Claude 3 model family, the first major update since Claude 2's debut in July 2023. The Claude 3 series includes three versions targeting different user needs:

- Claude 3 Opus. Opus, Anthropic's most advanced and costly model, is available to Claude Pro subscribers via the Claude AI web app for a $20 monthly fee or to developers via Anthropic's API at a rate of $15 per million input tokens and $75 per million output tokens. Anthropic recommends Opus for complicated tasks such as strategy, research and complex workflow automation.

- Claude 3 Sonnet. Positioned as the middle-tier option, Sonnet is available for free in the Claude AI web app and to developers via the Anthropic API at $3 per million input tokens and $15 per million output tokens. It's also available through the Amazon Bedrock and Google Vertex AI managed service platforms. Anthropic's recommended Sonnet use cases include data processing, sales and timesaving tasks such as code generation.

- Claude 3 Haiku. The cheapest model, Haiku, is available to Claude Pro subscribers in the web app and through Anthropic's API at $0.25 per million input tokens and $1.25 per million output tokens, as well as through Amazon Bedrock. Anthropic recommends using Haiku for tasks that require efficiency and quick response times, such as customer support, content moderation, and logistics and inventory management.
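Because input and output tokens are billed at different rates, the cost of a request depends on both counts. A minimal sketch of that arithmetic, using the per-million-token API rates listed above; the 10,000-input/1,000-output workload is a hypothetical example, not a measured one.

```python
# Claude 3 API rates from the list above: (input, output) in USD per million tokens.
CLAUDE_3_RATES = {
    "opus": (15.00, 75.00),
    "sonnet": (3.00, 15.00),
    "haiku": (0.25, 1.25),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one API request at the listed per-token rates."""
    input_rate, output_rate = CLAUDE_3_RATES[model]
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 10,000 input tokens and 1,000 output tokens.
    for model in CLAUDE_3_RATES:
        print(f"{model}: ${request_cost(model, 10_000, 1_000):.5f}")
```

For that hypothetical request, Opus costs $0.225, Sonnet $0.045 and Haiku $0.00375. Since Opus's input and output rates are each 60 times Haiku's, the gap stays at 60x regardless of the input/output mix.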

All Claude 3 models have an August 2023 knowledge cutoff and a 200,000-token context window, or about 150,000 English words. According to Anthropic, all three models can handle up to 1 million tokens for certain applications, but interested users will need to contact Anthropic for details. And although the Claude 3 series can analyze user-uploaded images and documents, it lacks image generation, voice and internet browsing capabilities.
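Image support in Claude 3 is input-only: an image goes into the prompt alongside text, and the model answers in text. The sketch below shows how such a user turn might be assembled for the Messages API, assuming the base64 image content block format described in Anthropic's vision documentation. The helper name is hypothetical, and the message is built but not sent.

```python
import base64


def image_message(image_bytes: bytes, question: str, media_type: str = "image/png") -> dict:
    """Build one user turn pairing an image with a text question (hypothetical helper)."""
    encoded = base64.standard_b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            # Image block: raw bytes sent as base64 with their MIME type.
            {
                "type": "image",
                "source": {"type": "base64", "media_type": media_type, "data": encoded},
            },
            # Text block: the question the model should answer about the image.
            {"type": "text", "text": question},
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real file read with open(path, "rb").read().
    msg = image_message(b"\x89PNG placeholder", "What does this chart show?")
    print(msg["content"][0]["source"]["media_type"])  # image/png
```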

ChatGPT

OpenAI provides a broader array of models than Anthropic, including multiple API options; two ChatGPT web versions; and specialized non-LLM models, such as Dall-E for image generation and Whisper for speech to text. OpenAI's main LLM offerings are GPT-4 and GPT-3.5:

- GPT-4. Powering the latest iteration of ChatGPT, GPT-4 is OpenAI's most advanced model. In ChatGPT, it comes with capabilities including image generation (through Dall-E integration), web browsing and voice interaction, and GPT-4 variants offer context windows of up to 128,000 tokens. Users can also create custom assistants called GPTs using GPT-4. It's accessible through OpenAI's paid plans -- Plus, Team and Enterprise -- and has knowledge cutoffs up to December 2023.

- GPT-3.5. The model behind the first iteration of ChatGPT, GPT-3.5 powers the free version of the web app. Although generally faster than GPT-4, GPT-3.5 has a smaller context window of 16,385 tokens and an earlier knowledge cutoff of September 2021. It also lacks its newer counterpart's multimodal and internet-browsing capabilities.
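Context window size determines how much text a model can consider at once. A rough way to check whether a document fits is to convert its word count to tokens; the sketch below derives a ratio of about 0.75 words per token from the figure of 200,000 tokens being roughly 150,000 English words. That ratio is only a heuristic (real token counts depend on each model's tokenizer and the text itself), and the output budget parameter is an illustrative assumption.

```python
# Context windows cited in this article, in tokens (GPT-4's is the largest variant's).
CONTEXT_WINDOWS = {
    "Claude 3": 200_000,
    "GPT-4": 128_000,
    "GPT-3.5": 16_385,
}

# Heuristic ratio implied by "200,000 tokens, or about 150,000 English words".
WORDS_PER_TOKEN = 150_000 / 200_000  # 0.75


def estimated_tokens(word_count: int) -> int:
    """Estimate a token count from an English word count (rough heuristic)."""
    return round(word_count / WORDS_PER_TOKEN)


def models_that_fit(word_count: int, reserve_for_output: int = 0) -> list[str]:
    """List models whose window can hold the text plus a reserved output budget."""
    needed = estimated_tokens(word_count) + reserve_for_output
    return [name for name, window in CONTEXT_WINDOWS.items() if needed <= window]


if __name__ == "__main__":
    # A hypothetical 90,000-word report comes to roughly 120,000 tokens.
    print(estimated_tokens(90_000))
    print(models_that_fit(90_000, reserve_for_output=4_000))
```

Under these assumptions, a 90,000-word report fits in Claude 3's and GPT-4's largest windows but not in GPT-3.5's.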

Individual users can access GPT-3.5 for free, while GPT-4 is available through a $20 monthly ChatGPT Plus subscription. OpenAI's API, Team and Enterprise plans, on the other hand, have more complex pricing structures. API pricing varies by model type, with separate rates for base language, fine-tuning, embedding, coding and image models. Team and Enterprise plan pricing depends on seat count and on whether billing is annual or monthly. For a more in-depth comparison, including API options, see our detailed GPT-3.5 vs. GPT-4 guide.

Architecture and performance

OpenAI and Anthropic remain tight-lipped about their models' specific sizes, architectures and training data. The models behind both Claude and ChatGPT are estimated to have hundreds of billions of parameters; a recent paper from Anthropic suggested that Claude 3 has at least 175 billion, and a report by research firm SemiAnalysis estimated that GPT-4 has more than 1 trillion. Both also use transformer-based architectures and are refined with training techniques such as reinforcement learning from human feedback.

To evaluate and compare models, users often turn to benchmark scores and LLM leaderboards, which measure language models' performance on tasks designed to test their capabilities. Anthropic, for example, claims that Claude 3 surpassed GPT-4 on a series of benchmarks, and its Opus model recently became the first to outperform GPT-4 on Chatbot Arena, a leaderboard that crowdsources user ratings of popular LLMs.
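Chatbot Arena builds its rankings from pairwise comparisons in which users vote for the better of two anonymous model responses, and it reports scores on an Elo-style scale. The classic Elo update below is a simplified sketch of how such votes can move ratings. The K-factor of 32, the starting rating of 1,000 and the model names are illustrative assumptions, and the leaderboard's actual statistical method differs in its details.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that model A wins a head-to-head vote under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update(rating_a: float, rating_b: float, score_a: float, k: float = 32) -> tuple[float, float]:
    """Apply one vote: score_a is 1 if A wins, 0 if B wins, 0.5 for a tie."""
    delta = k * (score_a - expected_score(rating_a, rating_b))
    # Points gained by one model are lost by the other.
    return rating_a + delta, rating_b - delta


if __name__ == "__main__":
    ratings = {"model_x": 1000.0, "model_y": 1000.0}  # hypothetical models
    votes = [("model_x", "model_y", 1), ("model_x", "model_y", 1), ("model_x", "model_y", 0)]
    for a, b, score in votes:
        ratings[a], ratings[b] = update(ratings[a], ratings[b], score)
    print(ratings)
```

Because each update scales with how surprising the result was, an upset win against a higher-rated model moves ratings more than an expected win, so rankings only stabilize after many votes.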

User-generated rankings such as Chatbot Arena's are at least independent of the model developers, but benchmark scores self-reported by AI developers should be evaluated with healthy skepticism. Without detailed disclosures about training data, methodologies and evaluation metrics -- which companies rarely, if ever, provide -- it's challenging to verify performance claims. And the lack of full public access to the models and their training data makes independently validating and reproducing benchmark results nearly impossible.

Especially in a market as competitive as the AI industry, there's always a risk that companies will selectively showcase benchmarks that favor their models while overlooking less impressive results. Direct comparisons are also complicated by the fact that different organizations might evaluate their models using different metrics for factors including effectiveness and efficient resource use.

Ultimately, Claude and ChatGPT are both advanced chatbots that excel at language comprehension and code generation, and most users will likely find both options effective for most tasks -- particularly the most advanced options, Opus and GPT-4. But details about models' training data and algorithmic architecture remain largely undisclosed. While this secrecy is understandable given competitive pressures and the potential security risks of exposing too much model information, it also makes it difficult to compare the two directly.

Privacy and security

Anthropic's company culture centers on minimizing AI risk and enhancing model safety. The startup pioneered the concept of constitutional AI, in which AI systems are trained on a set of foundational principles and rules -- a "constitution" -- intended to align their actions with human values.

Anthropic doesn't automatically use users' interactions with Claude to retrain the model. Instead, users must actively opt in -- note that rating model responses counts as opting in. This could appeal to businesses looking to use an LLM for workplace tasks while minimizing exposure of corporate information to third parties.

Claude's responses also tend to be more reserved than ChatGPT's, reflecting Anthropic's safety-centric ethos. Some users found earlier versions of Claude to be overly cautious, declining to engage even with unproblematic prompts, although Anthropic promises that the Claude 3 models "refuse to answer harmless prompts much less often." This abundance of caution could be beneficial or limiting, depending on the context; while it reduces the risk of inappropriate and harmful responses, not fulfilling legitimate requests also limits creativity and frustrates users.

Unlike Anthropic, OpenAI retrains ChatGPT on user interactions by default, but it's possible to opt out. One option is to not save chat history, with the caveat that the inability to refer back to previous conversations can limit the model's usefulness. Users can also submit a privacy request to ask OpenAI to stop training on their data without sacrificing chat history -- OpenAI doesn't exactly make this process transparent or user-friendly, though. Moreover, privacy requests don't sync across devices or browsers, meaning that users must submit separate requests for their phone, laptop and so on.

Similar to Anthropic, OpenAI implements safety measures to prevent ChatGPT from responding to dangerous or offensive prompts, although user reviews suggest that these protocols are comparatively less stringent. OpenAI has also been more open than Anthropic to expanding its models' capabilities and autonomy with features such as plugins and web browsing.

Additional capabilities

ChatGPT's real strength lies in its additional features: multimodality, internet access and GPTs. Users need some form of paid access to take advantage of these features, but a subscription could be worthwhile for regular heavy users.

With GPT-4, users can create images within text chats and refine them through natural language dialogues, albeit with varying degrees of success. GPT-4 also supports voice interactions, enabling users to speak directly with the model as they would with other AI voice assistants, and can search the web to inform its responses. Anthropic's Claude can analyze uploaded files, such as images and PDFs, but does not support image generation, voice interaction or web browsing.

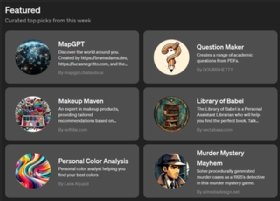

Another unique ChatGPT feature is GPTs: a no-code way for users to create a customized version of the chatbot for specific tasks, such as summarizing financial documents or explaining biology concepts. Currently, OpenAI offers a selection of GPTs made by OpenAI developers as well as an app store-like marketplace of user-created GPTs.

Users create GPTs using a text-based dialogue interface called the GPT Builder, which turns the conversation into a set of instructions for the new GPT. Once creation is complete, users can keep their GPTs private, share them with specific users or publish them to the OpenAI GPT marketplace for broader use.

At least for now, users might find limited value in the GPT marketplace due to a lack of vetting. User ratings of GPTs vary widely, and some GPTs seem primarily designed to funnel users to a company's website and proprietary software. Other GPTs are explicitly designed to bypass plagiarism and AI detection tools -- a practice that seemingly contradicts OpenAI's usage policies, as a recent TechCrunch analysis highlighted.

While Anthropic doesn't have a direct GPT equivalent, its prompt library has some similarities with the GPT marketplace. Released at roughly the same time as the Claude 3 model series, the prompt library includes a set of "optimized prompts," such as a Python optimizer and a recipe generator, presented in the form of GPT-style persona cards.

While Anthropic's prompt library could be a valuable resource for users new to LLMs, it's likely to be less helpful for those with more prompt engineering experience. From a usability perspective, the library has another limitation: users must either manually reenter a prompt for each interaction or use the API, rather than simply selecting a preconfigured GPT as they would in ChatGPT.
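For API users, one way to avoid retyping a library prompt is to store it once and send it as the system prompt on every request. The sketch below assumes Anthropic's Messages API, which accepts a top-level `system` field. The prompt text and the `SAVED_PROMPTS` dictionary are hypothetical stand-ins, not Anthropic's actual Python optimizer prompt, and the request body is built but not sent.

```python
# Hypothetical saved prompt standing in for an entry copied from a prompt library.
SAVED_PROMPTS = {
    "python_optimizer": (
        "You are an expert Python reviewer. Suggest faster, more idiomatic "
        "versions of the code the user provides and explain each change."
    ),
}


def build_request(prompt_name: str, user_input: str,
                  model: str = "claude-3-haiku-20240307") -> dict:
    """Attach a stored prompt as the system prompt so it needn't be retyped."""
    return {
        "model": model,  # assumed model identifier; verify before use
        "max_tokens": 1024,
        "system": SAVED_PROMPTS[prompt_name],  # reused persona instructions
        "messages": [{"role": "user", "content": user_input}],
    }


if __name__ == "__main__":
    req = build_request("python_optimizer", "def f(x): return [i for i in x]")
    print(req["system"])
```

This mirrors what a preconfigured GPT does inside ChatGPT: the instructions travel with every request, and only the user's message changes.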

Although OpenAI's GPTs and Anthropic's optimized prompts both offer some level of customization, users who want an AI assistant to perform specific tasks on a regular basis might find purpose-built tools more effective. For example, software developers might prefer AI coding tools such as GitHub Copilot, which offer integrated development environment support. Similarly, for AI-augmented web search, specialized AI search engines such as Perplexity could be more efficient than a custom-built GPT.

Lev Craig covers AI and machine learning as the site editor for TechTarget Editorial's Enterprise AI site. Craig graduated from Harvard University with a bachelor's degree in English and has previously written about enterprise IT, software development and cybersecurity.